Rapid iteration using Stable Diffusion

Stable Diffusion is a latent text-to-image diffusion model. The model takes a text input and converts that text into abstract representations of the underlying concepts, learnt through vast quantities of data. It then produces images using these abstract representations. Image-to-image generation is also supported, and it is this feature that will allow us to rapidly iterate when creating digital art.

Technologies using a similar approach to Stable Diffusion already exist, such as Google’s Imagen or OpenAI’s DALL-E. However, these models do not have the same level of public availability as Stable Diffusion, which anyone can run using either cloud compute or a local copy of the model. To run the model locally, a GPU with several gigabytes of memory is required. For context, I am using a GTX 1660 Super with 6GB VRAM. If your VRAM budget is limited, you can use OptimizedSD.

The inner workings of Stable Diffusion are fascinating and incredibly important. However, they are beyond the scope of this article, which serves as a high-level overview and a demonstration of one specific application of the technology.

Using Stable Diffusion in the creative process

Firstly, a confession: I am no artist. I lack the experience and creative skills required to distil my ideas into visual form. In fact, if you asked me to draw an image with the prompt “A view of planet Earth from the lunar surface”, I would proudly present you with the following:

“A view of planet Earth from the lunar surface” human generated

Granted, the requisite high-level components are present in the image: a meteor-pocked landscape, a starry vista of space and the small blue dot we call home. In fact, this image contains the minimum amount of information needed to represent the input prompt. However, it lacks finer details such as shadows or an accurate depiction of Earth. It is devoid of any polish or beauty that would make it even vaguely artistic.

This is where Stable Diffusion really shines: we can use our low-quality drawing as input to a model which will not just fill in the detail for us, but also let us add new elements to our image, produce countless permutations of new ideas and recreate it in any style.

Often when using image generation tools, a great deal of thought goes into prompt engineering: choosing a text input that provides the output you are expecting. The image-to-image approach, by contrast, means there is little need to awkwardly force our imagination into words.

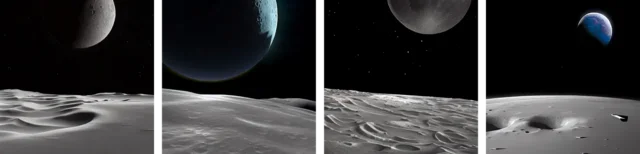

“The surface of the moon. Hyper realistic” strength=0.8, ddim_steps=30

The above image was generated by Stable Diffusion from our input. Using OptimizedSD with turbo enabled and 30 sampling steps (ddim_steps=30), this output took just over one minute to generate on my fairly modest GPU.

Making adjustments to the generated output

The detail of the lunar surface in the above images is fantastic. However, you will notice that in most of the examples, planet Earth has been converted into another moon! This is due to the creative liberty of Stable Diffusion, which is controlled by the ‘strength’ parameter. Reducing the strength value will produce an output closer to your input image, whereas a large strength value will allow the model to bring in new ideas and modify the input in surprising ways. Early in the creative process, I would encourage using a large strength value.

To fix the generated image, I loaded the output image into my image editor and removed the extraneous moon. I then drew a very simple view of planet Earth from space, and passed this into Stable Diffusion to generate an output.

“A photo-realistic image of planet Earth from Space” strength=0.6, ddim_steps=30

This looks much more like what I had in mind. Doing this for key components in your image gives you much more control over the final result, and allows for a level of detail that would otherwise require significant prompt engineering.
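To make the strength values in the captions above more concrete, here is a minimal sketch of how strength and ddim_steps interact. It assumes the rule used by the reference img2img script released with the model, where the number of denoising steps run on the noised input is `int(strength * ddim_steps)`. I am assuming OptimizedSD behaves the same way, and other front ends may round differently. The function name `denoising_steps` is my own.

```python
def denoising_steps(strength: float, ddim_steps: int) -> int:
    """Number of sampler steps applied to the noised input image.

    Assumption: mirrors the int(strength * ddim_steps) rule of the
    reference img2img script; other tools may compute this differently.
    """
    if not 0.0 <= strength <= 1.0:
        raise ValueError("strength must be in [0.0, 1.0]")
    return int(strength * ddim_steps)


# Settings taken from the two captions so far in this article.
settings = {
    "lunar surface": (0.8, 30),
    "planet Earth": (0.6, 30),
}

for name, (strength, steps) in settings.items():
    used = denoising_steps(strength, steps)
    print(f"{name}: {used} of {steps} steps are spent reworking the input")
```

Under this assumption, a higher strength means the input is noised further and more steps are spent regenerating it, which is why the model has more room to replace Earth with a second moon at 0.8 than at 0.6.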

Taking the much more detailed planet Earth generated by the model, I opened my image editor once more and superimposed it onto my lunar landscape. The result is an image that accurately matches the vision I had in my head when I created my initial sketch, at a level of detail far beyond my ability.

The generated planet Earth over our generated lunar landscape
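I did the superimposing by hand in an image editor, but the same step can be scripted. The sketch below is illustrative only and is not the tooling used for this article: it represents images as plain nested lists of RGB tuples so that it runs without any dependencies, and it assumes a square patch with a round subject, copying only the pixels inside the inscribed circle. The function name `paste_disc` and the tiny stand-in images are hypothetical. With real image files, an imaging library would take the place of the nested lists.

```python
from typing import List, Tuple

Pixel = Tuple[int, int, int]
Image = List[List[Pixel]]


def paste_disc(scene: Image, patch: Image, top: int, left: int) -> Image:
    """Return a copy of `scene` with the inscribed disc of `patch` pasted in.

    `patch` is assumed to be square. Only pixels inside its inscribed circle
    are copied, so the corners around a round subject are left out.
    """
    out = [row[:] for row in scene]
    size = len(patch)
    radius = size / 2
    for y in range(size):
        for x in range(size):
            # Offset of this pixel's centre from the centre of the patch.
            dy, dx = y + 0.5 - radius, x + 0.5 - radius
            inside = dx * dx + dy * dy <= radius * radius
            in_bounds = 0 <= top + y < len(out) and 0 <= left + x < len(out[0])
            if inside and in_bounds:
                out[top + y][left + x] = patch[y][x]
    return out


# Tiny stand-ins for the real images: a grey "moon" scene and a blue "Earth".
moon = [[(90, 90, 90)] * 8 for _ in range(6)]
earth = [[(30, 80, 200)] * 4 for _ in range(4)]

composite = paste_disc(moon, earth, top=1, left=3)
```

A hard-edged paste like this leaves visible seams, which is exactly the kind of rough edge that a further pass through Stable Diffusion can smooth over.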

Iterating endlessly

We have now created a backdrop scene onto which we can add as much detail as we please by repeating one cycle: generate an image, superimpose it onto our scene, then run the superimposed image through Stable Diffusion with a low strength and a descriptive prompt to smooth out any inconsistencies.

In this case, I want to add a lonely deck chair and parasol on the lunar surface. As before, I draw my basic sketch, this time onto my existing scene. Then, I export the sketch and feed it to Stable Diffusion, which gives the whole scene a uniform style and level of detail. In the leftmost image, you will notice that planet Earth is cropped abruptly. Running the image through Stable Diffusion resolves this in much the same way as it adds detail to our sketched element, allowing us to roughly paste in new content without wasting time tidying up smaller details.

“A detailed photo of a red and white deck chair on the moon under a parasol umbrella. planet earth is in the background” strength=0.7, ddim_steps=50
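The cycle described in this section can be sketched as a simple loop. This is a rough outline under stated assumptions rather than a real pipeline: `Element`, `iterate`, `fake_superimpose` and `fake_img2img` are all hypothetical names, and the two stand-in functions only record what would happen so that the loop runs without a model. In practice, `superimpose` would be your image editor (or a scripted paste) and `img2img` would be a call into Stable Diffusion.

```python
from dataclasses import dataclass
from typing import Any, Callable, Iterable


@dataclass
class Element:
    """One new item to add to the scene."""
    sketch: str       # label for the rough drawing (a stand-in for real pixels)
    prompt: str       # descriptive prompt for the blended result
    strength: float   # how far the model may depart from the composite
    ddim_steps: int   # sampler steps requested for this pass


def iterate(
    scene: Any,
    elements: Iterable[Element],
    superimpose: Callable[[Any, Element], Any],
    img2img: Callable[[Any, str, float, int], Any],
) -> Any:
    """Add each element to the scene, then blend it in with an img2img pass."""
    for element in elements:
        composite = superimpose(scene, element)
        scene = img2img(composite, element.prompt, element.strength, element.ddim_steps)
    return scene


# Stand-ins so the loop runs without a model: the "image" is a list of notes.
def fake_superimpose(scene, element):
    return scene + [f"pasted {element.sketch}"]


def fake_img2img(image, prompt, strength, ddim_steps):
    return image + [f"blended at strength={strength}, ddim_steps={ddim_steps}"]


# Prompt and settings taken from the deck chair caption above.
deck_chair = Element(
    sketch="deck chair and parasol",
    prompt=(
        "A detailed photo of a red and white deck chair on the moon under "
        "a parasol umbrella. planet earth is in the background"
    ),
    strength=0.7,
    ddim_steps=50,
)

history = iterate(
    ["lunar landscape with Earth"], [deck_chair], fake_superimpose, fake_img2img
)
print("\n".join(history))
```

Passing the two steps in as functions keeps the loop independent of any particular tool, so the manual editor workflow and a fully scripted one share the same shape.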

The result is a unique piece of art that represents what I was imagining in visual form, produced with minimal artistic ability. I could go on adding new elements and tweaking the style: I could recreate this image as a Renaissance painting or a crayon illustration. The possibilities are limitless.

The tools required to create the images used in this article have been developed over the past week by the community, since the release of Stable Diffusion. With such momentum, the future is bright for the applications of models such as Stable Diffusion outside of niche computer science and machine learning communities. As the tools become more accessible, they are exposed to people with new points of view, fresh ideas and talents. General availability of such a model is the catalyst for progress and innovation, and is what makes Stable Diffusion so exciting.